
How to Protect Yourself from AI Election Misinformation

As the 2024 race for President heats up, so too does concern over the spread of AI misinformation in elections.

“It’s an arms race,” says Andy Parsons, senior director of Adobe’s Content Authenticity Initiative (CAI). And it’s not a fair race: the use of artificial intelligence to spread misinformation is a struggle in which the “bad guys don't have to open source their data sets or present at conferences or prepare papers,” Parsons says.

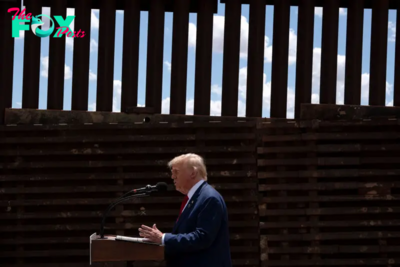

AI misinformation has already become an issue this year. Pop sensation Taylor Swift endorsed Vice President Kamala Harris after former President Donald Trump posted a fake image of her endorsing him. “It really conjured up my fears around AI, and the dangers of spreading misinformation,” Swift stated in the Instagram caption of her Harris endorsement.

The Swift incident isn’t the first instance of AI misinformation this election cycle. Florida Governor and then-Republican presidential candidate Ron DeSantis posted a campaign video in June 2023 that included apparently fake images of Trump hugging Anthony Fauci, the former director of the National Institute of Allergy and Infectious Diseases. Earlier this year, a political consultant sent voters AI-generated robocalls mimicking President Joe Biden’s voice ahead of New Hampshire’s presidential primary, suggesting that voting in the primary would preclude them from casting ballots in November.

A new study by Parsons and the CAI, published Wednesday, found that a large majority of respondents (94%) are concerned that the spread of misinformation will impact the upcoming election, and 87% said that the rise of generative AI has made it more challenging to discern fact from fiction online. The survey collected 2,002 responses from U.S. citizens, all of whom were 18 or older.

“I don't think there's anything that 93% of the American public agrees on, but apparently this is one of them, and I think there's a good reason for that,” said Hany Farid, professor at the University of California, Berkeley and advisor to the CAI. “There are people on both sides creating fake content, people denying real content, and suddenly you go online like, ‘What? What do I make of anything?’”

A bipartisan group of lawmakers introduced legislation Tuesday that would prohibit political campaigns and outside political groups from using AI to pretend to be politicians. Multiple state legislatures have now also introduced bills regulating deepfakes in elections.

Parsons believes now is a “true tipping point” in which “consumers are demanding transparency.” But in the absence of significant laws or effective technological guardrails, there are things you can do to protect yourself from AI misinformation heading into November, researchers say.

One key tactic is to not rely on social media for election news. “Getting off social media is step number one, two, three, four, and five; it is not a place to get reliable information,” says Farid. You should view X (formerly Twitter) and Facebook as spaces for fun, not spaces to “become an informed citizen,” he says.

Since social media is where many deepfakes and much AI misinformation spread, informed citizens must fact-check what they see against sites like Politifact, factcheck.org, and Snopes, or against major media outlets, before reposting. “The fact is: more likely than not you're part of the problem, not the solution,” Farid says. “If you want to be an informed citizen, fantastic. But that also means not poisoning the minds of people, and going to serious outlets doing fact checks.”

Another strategy is to carefully examine the images you see spreading online. Kaylyn Jackson Schiff and Daniel Schiff, assistant professors of political science at Purdue University, are working on a database tracking politically relevant deepfakes. They say technology has made it harder than ever for consumers to be proactive and spot the differences between AI-generated content and reality. Still, they have found in their research that many of the popular deepfakes they study are “lower quality” than real photos.

“We'll go to conferences and people say, ‘Well, I knew those were fake. I knew that Trump wasn't meeting with Putin.’ But we don't know if this works for everybody,” Daniel Schiff says. “We know that the images that can be created can be super persuasive to coin-flip levels of accuracy detection by the public.” And as the technology advances, that’s only going to become more difficult, and the strategy may “not work in two years or three years,” he says.

To help users evaluate what they’re seeing online, the CAI is working to implement “Content Credentials,” which it describes as a “nutrition label” for digital content: verifiable metadata that records the date and time the content was created or edited and signals whether and how AI may have been used. Still, researchers agree that reestablishing public faith in trusted information will need to be a multifaceted effort, including regulations, technology, and consumer media literacy. Says Farid: “There is no silver bullet.”
