Bill would criminalize 'extremely harmful' online 'deepfakes'

Newly introduced legislation seeks to protect individuals nationwide from being misrepresented by certain kinds of digital content known as "deepfakes," a category that includes deceptive political messages, computer-generated sexual abuse material and more.

"We know that weaponized deception can be extremely harmful to our society," said Rep. Yvette Clarke, D-N.Y., who authored the bill and introduced it in the House last week, reviving legislation she first put forth in 2019. "This bill is meant to take us into the 21st century and establish a baseline so we can discern who is intending to harm us."

Clarke told ABC News that her DEEPFAKES Accountability Act would give prosecutors, regulators and especially victims resources, such as detection technology, that she believes they need to stand up to the threat posed by nefarious deepfakes.

AI-generated content has exploded in recent years because generative tools have become widely accessible and easy to use. Generative AI is a type of artificial intelligence capable of producing content, including text, images, audio and video, from a simple prompt.

As recently as a few years ago, a user would have needed a certain level of technical skill to use AI to make content, but now it's just a matter of downloading an app or clicking a few buttons on a website.

While some uses of the technology are positive, such as helping to automate tasks, experts have pointed to a number of other ways in which the tools are being used for illicit purposes -- from phone scams and online extortion to the creation and distribution of deepfakes, which can be impersonations of public figures or even false, sexual images based on someone's likeness.

Clarke's proposed regulation also comes ahead of a presidential election in which access to generative AI tools gives candidates and their supporters the ability to produce realistic-looking fakes to advance partisan messages.

"We are already seeing [political groups] using generative AI to try to harm their opponents," University of California, Berkeley, computer science professor Hany Farid told ABC News.

"It's so easy to do," Farid warned.

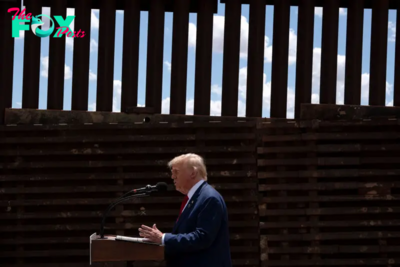

And the examples have already started piling up -- from images falsely depicting what appears to be President Joe Biden in a Republican Party ad to an outside political group supporting Florida Gov. Ron DeSantis' White House bid using AI technology to fabricate former President Donald Trump's voice.

Google announced earlier this month that it will require all verified election advertisers to clearly disclose any use of synthetic media that makes it appear as if a person is saying or doing something they didn't say or do. Additionally, any ads that use AI to alter footage of real events or generate a realistic scene must be clearly labeled, according to the platform.

At this time, there is no national legislation specifically addressing deepfakes or deceptive uses of generative AI, but earlier this month executives from the nation's biggest technology companies met behind closed doors with U.S. senators to discuss possible government regulation.

Clarke's bill was originally created to focus on people who find themselves misrepresented in a deepfake, a senior aide to her told ABC News.

If passed, the legislation would require creators to label all deepfakes uploaded to online platforms and make transparent any alterations made to a video or other type of content.

Under Clarke's bill, users who fail to label "malicious deepfakes" would face a criminal penalty including prison time and fines. This category would encompass deepfakes related to sexual content, criminal conduct, incitement of violence and foreign interference in elections.

"This sends a signal to bad actors that they won't get away with deceiving people," Clarke said.

All other types of deepfakes would be subject to civil penalties, including a private right of action that would allow those harmed to sue for damages.

The legislation also seeks to address harms caused by nonconsensual deepfake pornography, which is often described by experts as image-based sexual abuse.

Experts say there's an entire commercial industry that thrives on creating and sharing digitally created content of sexual abuse, including websites that have hundreds of thousands of paying members. Few resources exist for victims who find their likeness being used and manipulated in this way.

It's unclear how quickly others in the House might take up Clarke's bill or if it will garner sufficient support to pass. Because of the limitations it places on content, Clarke knows that it could also be subject to legal challenges under the First Amendment. But she says she believes the text, which was drafted with legal advice, is sound.

Some experts, such as Henry Ajder, who has previously mapped the use of deepfakes online, believe the scope of the bill may be too narrow.

"It is good to see legislation that is trying to capture harms caused by deepfakes and synthetic media," Ajder said. "But this specific framing potentially misses a whole range of synthetic media that isn't just about human subjects -- like the fake image of the Pentagon ablaze that caused the financial markets to" briefly dip earlier this year.

Clarke's bill would further require all creators of deepfakes to include content credentials -- that is, the origins and entire history of a piece of content, including how it was captured and how it was changed. Under the legislation, online platforms that host generative AI content would also be required to display the origins of that content.

As previously reported by ABC News, content credential technology allows creators to record any changes made to a piece of content and display it when published to increase transparency and promote authenticity.

A coalition of companies, working together as the Content Authenticity Initiative, has been developing a way for this technology to be used by any digital platform that wishes to incorporate it into their systems. They hope this new standard will restore trust in what users see online.

Some social media platforms are already moving in the direction of labeling all AI-generated content. Last week, TikTok announced it was launching a new tool that will help creators label their AI-generated content.

The social media platform says it does not allow synthetic media of public figures if the content is used "for endorsements or violates any other policy."

"I think our regulators are asking a lot of good questions, and they're having hearings, and we're having conversations and we're doing briefings and I think that's good. And I think a lot of this is creating public awareness," said Farid, the computer science professor. "I think that's a necessary but not sufficient step. I think we have to now act on all of this."